Binwalk is a super useful tool that scans a given file's contents for embedded files and data, and lets you analyze and extract them. A common use for this is reverse engineering binaries to discover hidden data. That said, you can also use it to identify files that have the wrong extension, find files hidden inside various file types (a PowerPoint file, for example, is really a collection of files), and extract those files.

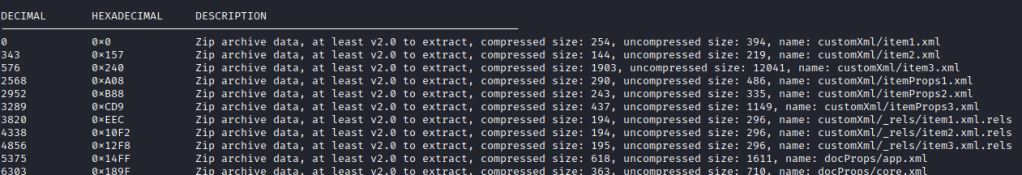

To start with, running `binwalk <filename>` will display information about the file, such as the different files that make up the original file. Let's take PowerPoint as an example. If you run `binwalk example.pptx`, you will get some variation of the following:

This is because a .pptx file is a ZIP archive containing the many different files that make up the final presentation. The binwalk output lists the contents of the .pptx file as well as the offset at which each file begins, in both decimal and hexadecimal.
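For ZIP-based formats such as .pptx, a similar listing can be approximated with Python's standard `zipfile` module. This is only an illustrative sketch, not how binwalk itself works: `list_member_offsets` and `build_demo_archive` are hypothetical helpers, and the tiny in-memory archive stands in for a real presentation.

```python
import io
import zipfile


def list_member_offsets(data: bytes):
    """Return (decimal offset, hex offset, name) for each ZIP member."""
    rows = []
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            # header_offset is where the member's local file header starts
            rows.append((info.header_offset, hex(info.header_offset), info.filename))
    return rows


def build_demo_archive() -> bytes:
    """Build a tiny stand-in archive with .pptx-style member names."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("[Content_Types].xml", "<Types/>")
        archive.writestr("ppt/presentation.xml", "<presentation/>")
    return buffer.getvalue()


if __name__ == "__main__":
    for decimal, hexadecimal, name in list_member_offsets(build_demo_archive()):
        print(f"{decimal:<10}{hexadecimal:<12}{name}")
```

To try it on a real file, pass the bytes of your own presentation to `list_member_offsets` instead of the demo archive.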

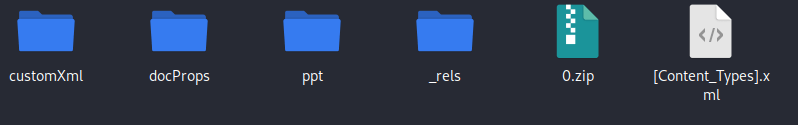

If, for example, you weren't sure where to look but suspected that a hidden file was located within the original file, you could use `binwalk -e` to extract all of the files it found. In the example above, it would extract the zip files and all of the .xml files within those zip files into a folder. The result may look like the following:

You can then search the extracted files for the hidden file, or for the flag if this is a CTF.
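Searching the extracted folder by hand gets tedious, so here is a rough Python sketch that walks a folder and looks for a flag-like string in every file. The `flag{...}` pattern, the `find_flags` helper, and the folder name `_example.pptx.extracted` are all assumptions for illustration; use the flag format of your CTF and whatever folder binwalk actually created.

```python
import re
from pathlib import Path

# Hypothetical flag format; CTFs vary, so adjust the pattern to the event.
FLAG_PATTERN = re.compile(rb"flag\{[^}]*\}")


def find_flags(root):
    """Yield (path, flag text) for every pattern hit in files under root."""
    root = Path(root)
    if not root.is_dir():
        return  # nothing to search
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        # Read as bytes so binary files are searched too
        data = path.read_bytes()
        for match in FLAG_PATTERN.finditer(data):
            yield path, match.group().decode(errors="replace")


if __name__ == "__main__":
    # Assumed folder name; point this at the real extraction folder.
    for path, flag in find_flags("_example.pptx.extracted"):
        print(f"{path}: {flag}")
```

Reading whole files into memory is fine for a presentation's worth of data; for very large extractions you would want to read in chunks instead.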

If, however, you only want to extract certain files (in this case PNGs), you can use `binwalk --dd='png image:png' filename`. This will extract all PNG files from the original file. You can also use `binwalk --dd='.*' filename` to extract ALL files from the original file. Sometimes I find this has more luck than a generic `binwalk -e`.
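To get a feel for what pulling PNGs out of a larger file involves, here is a deliberately naive Python sketch of signature-based carving. It is not binwalk's own logic: `carve_pngs` is a hypothetical helper that looks for the 8-byte PNG signature and cuts at the first `IEND` chunk after it, so it can be fooled if the bytes `IEND` happen to appear inside image data.

```python
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
IEND = b"IEND"


def carve_pngs(data: bytes):
    """Return (offset, png_bytes) for each PNG-looking region in data."""
    found = []
    start = data.find(PNG_SIGNATURE)
    while start != -1:
        iend = data.find(IEND, start)
        if iend == -1:
            break  # signature without an end marker; nothing more to carve
        end = iend + len(IEND) + 4  # chunk type plus its 4-byte CRC
        found.append((start, data[start:end]))
        start = data.find(PNG_SIGNATURE, end)
    return found


if __name__ == "__main__":
    # Not a viewable image: just a signature followed by an IEND chunk.
    fake_png = PNG_SIGNATURE + b"\x00\x00\x00\x00IEND\xaeB`\x82"
    blob = b"junk" + fake_png + b"more junk" + fake_png
    for offset, png in carve_pngs(blob):
        print(f"{offset:<8}{hex(offset):<8}{len(png)} bytes")
```

Each carved region could then be written to its own file for inspection.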